When we observe the possible outcomes of an event and consider how surprising or uncertain each one would be, we are trying to get an idea of the average information content of the event's source. Entropy can be defined as a measure of the average information content per source symbol. Claude Shannon, the “father of information theory”, provided a formula for it:

$$H = -\sum_{i} p_i \log_b p_i$$

where $p_i$ is the probability of occurrence of character number $i$ in a given stream of characters and $b$ is the base of the logarithm used. Shannon defined the quantity of information produced by a source (for example, the quantity in a message) with a formula similar to the equation that defines thermodynamic entropy in physics. This quantity is therefore also called Shannon entropy.

The amount of uncertainty remaining about the channel input after observing the channel output is called the conditional entropy. It is denoted by $H(X \mid Y)$.

Mutual Information

Consider a channel whose input is $X$ and whose output is $Y$. Let the prior uncertainty about the input, before the output is observed, be the entropy $H(X)$. To measure the uncertainty that remains about the input after a particular output $Y = y_k$ has been observed, consider the conditional entropy

$$H(X \mid y_k) = \sum_{j=0}^{J-1} p(x_j \mid y_k) \log_2 \left[ \frac{1}{p(x_j \mid y_k)} \right]$$

where $x_0, \dots, x_{J-1}$ are the $J$ possible input symbols. Averaging over all outputs gives $H(X \mid Y) = \sum_k p(y_k)\, H(X \mid y_k)$. The difference $I(X;Y) = H(X) - H(X \mid Y)$ is the uncertainty about the channel input that is resolved by observing the channel output. This difference is the mutual information of the channel.

Channel capacity builds on mutual information. For a discrete memoryless channel, the channel capacity is the maximum average mutual information per signaling interval, taken over all input probability distributions $\{p(x_j)\}$. It is the maximum rate at which data can be transmitted reliably over the channel. It is denoted by $C$, is measured in bits per channel use, and is given by

$$C = \max_{\{p(x_j)\}} I(X;Y)$$

Discrete Memoryless Source

A source that emits data at successive intervals, with each value independent of the previous values, is called a discrete memoryless source. The source is discrete because it is considered at discrete time intervals rather than over a continuous time interval.
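The entropy, conditional entropy, mutual information and capacity definitions above can be checked numerically. The following is a minimal Python sketch that assumes the channel is described by a joint probability table `joint[j][k]` $= p(x_j, y_k)$. The function names and the binary symmetric channel used as a test case are hypothetical illustrations. The grid search over $P(X=0)$ is only a brute-force stand-in for the maximization in the definition of $C$; practical tools typically use the Blahut–Arimoto algorithm instead.

```python
import math

def entropy(probs, base=2):
    """Shannon entropy H = -sum_i p_i log_b p_i, written as sum_i p_i log_b(1/p_i).
    Zero-probability symbols contribute nothing."""
    return sum(p * math.log(1 / p, base) for p in probs if p > 0)

def conditional_entropy(joint):
    """H(X|Y) = sum_k p(y_k) H(X|y_k), where joint[j][k] = p(x_j, y_k)."""
    num_inputs, num_outputs = len(joint), len(joint[0])
    total = 0.0
    for k in range(num_outputs):
        p_yk = sum(joint[j][k] for j in range(num_inputs))
        if p_yk == 0:
            continue  # this output never occurs, so it adds nothing
        # Posterior p(x_j | y_k) for every input symbol x_j
        posterior = [joint[j][k] / p_yk for j in range(num_inputs)]
        total += p_yk * entropy(posterior)
    return total

def mutual_information(joint):
    """I(X;Y) = H(X) - H(X|Y), in bits."""
    p_x = [sum(row) for row in joint]
    return entropy(p_x) - conditional_entropy(joint)

def bsc_joint(p0, flip):
    """Hypothetical test channel: binary symmetric channel with P(X=0) = p0
    and crossover (bit-flip) probability `flip`."""
    p1 = 1 - p0
    return [[p0 * (1 - flip), p0 * flip],
            [p1 * flip, p1 * (1 - flip)]]

def capacity_by_search(flip, steps=1000):
    """Brute-force C = max over input distributions of I(X;Y), scanning P(X=0) on a grid."""
    return max(mutual_information(bsc_joint(i / steps, flip)) for i in range(steps + 1))

if __name__ == "__main__":
    print("H(fair coin) =", entropy([0.5, 0.5]))
    print("I(X;Y), noiseless channel, uniform input =", mutual_information(bsc_joint(0.5, 0.0)))
    print("C, BSC with 10% flips ~", round(capacity_by_search(0.1), 4))
```

For the 10% crossover channel, the search should pick the uniform input. Its result should agree with the known closed form for a binary symmetric channel, $C = 1 - H(0.1) \approx 0.531$ bits per channel use.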
The difference in these conditions help us gain knowledge on the probabilities of the occurrence of events.

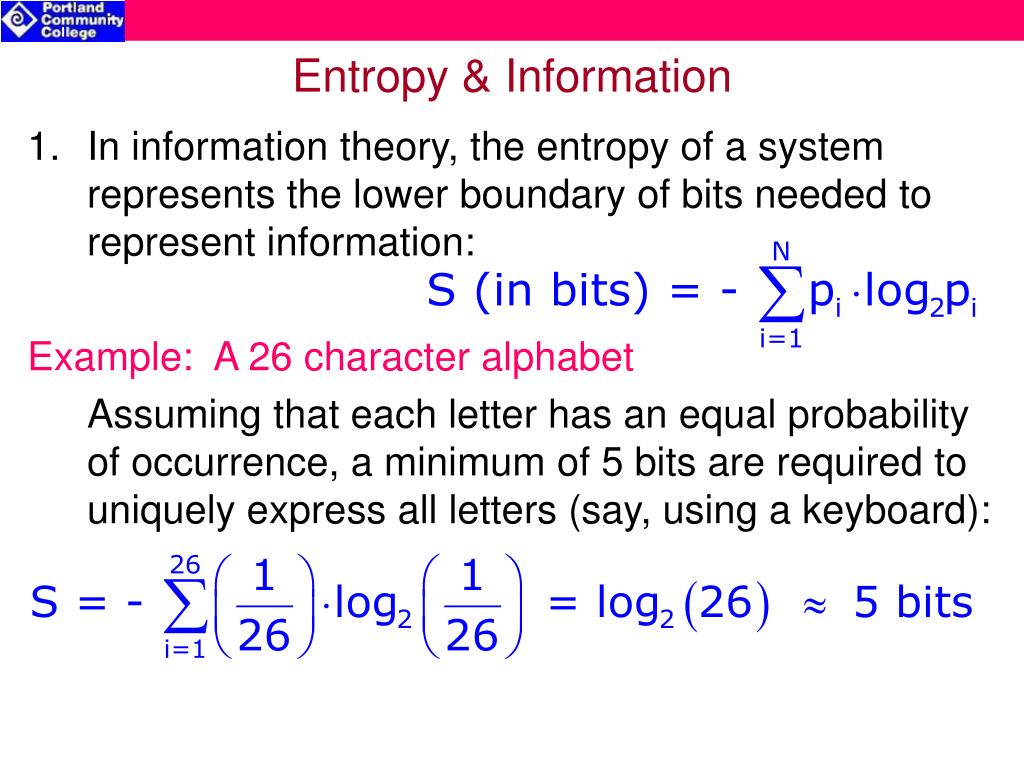

These three events occur at different times. If the event has occurred, a time back, there is a condition of having some information. If the event has just occurred, there is a condition of surprise. If the event has not occurred, there is a condition of uncertainty. If we consider an event, there are three conditions of occurrence. Information theory is a mathematical approach to the study of coding of information along with the quantification, storage, and communication of information. This information would have high entropy.Information is the source of a communication system, whether it is analog or digital. This information would be very valuable to them. The relative entropy, D(pkqk), quantifies the increase in the average number of units of information needed per symbol if the encoding is optimized for the. If they were told about something they knew little about, they would get much new information. This information would have very low entropy. It will be pointless for them to be told something they already know. If someone is told something they already know, the information they get is very small. If there is a 100-0 probability that a result will occur, the entropy is 0. It does not involve information gain because it does not incline towards a specific result more than the other. In the context of a coin flip, with a 50-50 probability, the entropy is the highest value of 1. The information gain is a measure of the probability with which a certain result is expected to happen. It has applications in many areas, including lossless data compression, statistical inference, cryptography, and sometimes in other disciplines as biology, physics or machine learning. The "average ambiguity" or Hy(x) meaning uncertainty or entropy. It measures the average ambiguity of the received signal." "The conditional entropy Hy(x) will, for convenience, be called the equivocation. 
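The coin-flip figures and the relative-entropy definition above can be reproduced with a short Python sketch. It assumes base-2 logarithms, so results are in bits. The distributions `p` and `q` are hypothetical examples, and the function names are illustrative rather than taken from any library. The sketch also checks that relative entropy equals cross-entropy minus entropy. That identity is the "extra bits per symbol" reading of $D(p_k \| q_k)$.

```python
import math

def entropy_bits(p):
    """H(p) = sum_k p_k log2(1/p_k); zero-probability outcomes contribute nothing."""
    return sum(x * math.log2(1 / x) for x in p if x > 0)

def cross_entropy_bits(p, q):
    """Average bits per symbol when symbols follow p but the code is optimized for q."""
    return sum(pk * math.log2(1 / qk) for pk, qk in zip(p, q) if pk > 0)

def relative_entropy_bits(p, q):
    """D(p || q) = sum_k p_k log2(p_k / q_k); assumes q_k > 0 wherever p_k > 0."""
    return sum(pk * math.log2(pk / qk) for pk, qk in zip(p, q) if pk > 0)

if __name__ == "__main__":
    # Entropy of a coin as it moves from fair (50-50) to certain (100-0)
    for heads in (0.5, 0.9, 0.99, 1.0):
        print(f"P(heads) = {heads}: H = {entropy_bits([heads, 1 - heads]):.3f} bits")

    p = [0.5, 0.25, 0.25]   # hypothetical true distribution
    q = [1/3, 1/3, 1/3]     # hypothetical distribution the code was optimized for
    print("H(p)        =", entropy_bits(p))
    print("cross H(p,q) =", cross_entropy_bits(p, q))
    print("D(p || q)   =", relative_entropy_bits(p, q))
```

The printed coin entropy falls from 1 bit for the fair coin to 0 for the certain result. $D(p \| q)$ is zero only when the code is optimized for the true distribution.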
Information entropy is a concept from information theory. It tells how much information there is in an event. In general, the more certain or deterministic an event is, the less information it contains. More precisely, the more uncertainty (entropy) an event's outcome resolves, the more information it carries. The concept of information entropy was created by the mathematician Claude Shannon. The relationship between information and entropy can be modeled by

$$R = H(x) - H_y(x)$$

where $H(x)$ is the entropy of the source, $H_y(x)$ is the equivocation, and $R$ is the rate of actual transmission.
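The following small Python sketch makes $R = H(x) - H_y(x)$ concrete for an assumed case. A binary source sends 0s and 1s with equal probability at 1000 symbols per second through a channel that flips 1 symbol in 100 (a binary symmetric channel). These numbers are illustrative assumptions, similar to the example Shannon discusses. Because the input is equiprobable, the equivocation per symbol is the binary entropy of the error probability. The function names are hypothetical.

```python
import math

def binary_entropy(p):
    """H(p) = p log2(1/p) + (1-p) log2(1/(1-p)) in bits; 0 when p is 0 or 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return p * math.log2(1 / p) + (1 - p) * math.log2(1 / (1 - p))

def actual_rate(symbols_per_second, error_prob):
    """R = H(x) - H_y(x) for equiprobable binary symbols over a binary symmetric channel.

    Assumes p(0) = p(1) = 1/2, so H(x) = 1 bit per symbol, and the equivocation
    H_y(x) per symbol equals the binary entropy of the error probability.
    """
    source_rate = symbols_per_second * 1.0                          # H(x), bits per second
    equivocation = symbols_per_second * binary_entropy(error_prob)  # H_y(x), bits per second
    return source_rate, equivocation, source_rate - equivocation

if __name__ == "__main__":
    h, hy, r = actual_rate(1000, 0.01)
    # Roughly: H(x) = 1000, H_y(x) ≈ 81, R ≈ 919 bits per second
    print(f"H(x) = {h:.0f} b/s, H_y(x) = {hy:.1f} b/s, R = {r:.1f} b/s")
```

$R$ is not simply 1000 minus the 10 wrongly received symbols per second. The equivocation also accounts for not knowing which of the received symbols are the wrong ones.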
